Chapter 39 \(A^+\) and \(A^-\)

Wednesday, May 5, 1993

Assume \(\Gamma = (X, E)\) is thin, distance regular of diameter \(D\geq 5\), and \(Q\)-polynomial with respect to \(E_0, E_1, \ldots, E_D\).

Fix a vertex \(x\in X\), and write \(E^*_i\equiv E^*_i(x)\), \(R\equiv R(x)\), \(T\equiv T(x)\).

Pick \(y\in X\) with \(\partial(x,y) = 1\). Write \(E^*_{ij} \equiv E^*_i(x)E^*_j(y)\), \(\delta_{ij} = E^*_{ij}\delta\), and \(\tilde{A} = E^*_1AE^*_1\).
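To make the notation concrete, here is a minimal Python sketch on the Johnson graph \(J(10,5)\). The choice of graph is an assumption made only for illustration and is not part of the lecture; it is distance-regular of diameter \(5\), with \(\partial(u,v) = 5 - |u\cap v|\). The sketch reads \(\delta\) as the all-ones vector, so that \(\delta_{ij}\) is the characteristic vector of the vertices at distance \(i\) from \(x\) and \(j\) from \(y\). The helper names `dist` and `delta_ij` are hypothetical.

```python
from itertools import combinations

# Assumed example graph: Johnson graph J(10,5).
# Vertices are the 5-subsets of {0,...,9}; two are adjacent when they share 4 elements.
X = [frozenset(c) for c in combinations(range(10), 5)]

def dist(u, v):
    # In J(n,d) the path-length distance equals d - |u ∩ v|.
    return 5 - len(u & v)

def delta_ij(x, y, i, j):
    # Support of delta_ij = E*_i(x) E*_j(y) delta (delta read as the all-ones vector):
    # the vertices at distance i from x and distance j from y.
    return {v for v in X if dist(x, v) == i and dist(y, v) == j}

x = frozenset({0, 1, 2, 3, 4})
y = frozenset({0, 1, 2, 3, 5})   # an arbitrary neighbour of x

d10 = delta_ij(x, y, 1, 0)
d11 = delta_ij(x, y, 1, 1)
d12 = delta_ij(x, y, 1, 2)
first = {v for v in X if dist(x, v) == 1}   # support of E*_1(x) delta

print(len(d10), len(d11), len(d12), len(first))
```

In this example the three supports partition the neighbours of \(x\), which is the set-level form of \(E^*_1\delta = \delta_{10} + \delta_{11} + \delta_{12}\).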

Recall that \(\delta^+_{11}\in E^*_{11}V\) and \[R^{-1}E^*_2A_2E^*_1\hat{y} = \delta^+_{11} + \Psi(x,y)\hat{y}.\] We saw \(\Psi(x,y) = \Psi(y,x)\). We shall show below that \(\Psi(x,y)\) is independent of the edge \(xy\).

Lemma 39.1 With the above notation, set \(\Psi:=\Psi(x,y)\). Then the following hold.

Proof.

\[\begin{equation} \tilde{A}\delta^+_{11} = \delta^-_{12} + \delta^-_{11} + \delta^-_{10}, \tag{39.1} \end{equation}\] \[\begin{equation} \delta^-_{12} = E^*_{12}AE^*_{11}\delta^+_{11} = -\Psi(x,y)\delta_{12} \tag{39.2}, \end{equation}\] by Lemma 38.2 \((v)\).

Also, \(\delta^-_{10} = \sigma\hat{y}\) for some \(\sigma\in \mathbb{C}\), where \[\begin{equation} \sigma = \langle \tilde{A}\delta^+_{11}, \hat{y}\rangle = \langle \delta^+_{11}, \tilde{A}\hat{y}\rangle = \langle \delta^+_{11}, \delta_{11}\rangle = \frac{a_2}{c_2}-\Psi. \tag{39.3} \end{equation}\]

Solving for \(\delta^-_{11}\) in (39.1), using (39.2) and (39.3), we have \[\begin{align} \delta^-_{11} & = \tilde{A}\delta^+_{11} - \delta^-_{12} - \delta^-_{10}\\ & = \tilde{A}\delta^+_{11} + \Psi \delta_{12} - \left(\frac{a_2}{c_2} - \Psi\right)\hat{y}. \end{align}\]

Since \[\delta^-_{11} = E^*_{11}AE^*_{11}\delta^+_{11},\] we have \(\delta^+_{11}(x,y) = \delta^+_{11}(y,x)\).

Lemma 39.2 With the above notation, \(\Psi = \Psi(u,v)\) is independent of \(u, v\), where \(u,v\in X\) with \(\partial(u,v)=1\).

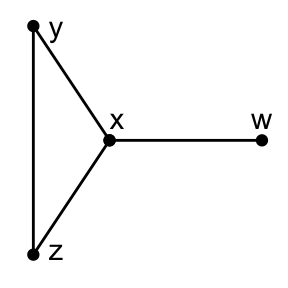

Proof. Let \(x,y\) be as above (\(x\sim y\)), and pick \(z\in X\) such that \(\partial(x,z) = 1\), but \(z\neq y\). Then it suffices to show: \[\Psi(x,y) = \Psi(x,z).\]

Case: \(\partial(y,z) = 2\).

Set \(\Delta := \tilde{A}R^{-1}E^*_2A_2E^*_1\).

Observe: \(\Delta \in E^*_1TE^*_1\) and \(E^*_1TE^*_1\) is symmetric by Lemma 33.4.

Hence, \(\Delta_{yz} = \Delta_{zy}\).

Since \(\Delta\in \mathrm{Mat}_X(\mathbb{R})\), \[\langle \Delta\hat{y}, \hat{z}\rangle = \langle \Delta \hat{z}, \hat{y}\rangle.\] But, \[\begin{align} \langle \Delta\hat{y}, \hat{z}\rangle & = \langle \tilde{A}(\delta^+_{11} + \Psi(x,y)\hat{y}), \hat{z}\rangle \\ & = \langle \tilde{A}\delta^+_{11}, \hat{z}\rangle\\ & = \Bigl\langle \delta^-_{11} + \left(\frac{a_2}{c_2}-\Psi(x,y)\right)\hat{y} - \Psi(x,y)\delta_{12}, \hat{z}\Bigr\rangle\\ & = -\Psi(x,y). \end{align}\] Here the second equality holds because \(\tilde{A}\hat{y} = \delta_{11}\) and \(\langle \delta_{11}, \hat{z}\rangle = 0\), the third is Lemma 39.1 \((i)\), and the last uses \(\partial(y,z) = 2\).

Similarly, \[\langle\Delta\hat{z}, \hat{y}\rangle = -\Psi(x,z).\] Hence, \(\Psi(x,y) = \Psi(x,z)\).

Case: \(\partial(y,z) = 1\).

By Lemma 38.1 \((ii)\), there exists \(w\in X\) such that \[\partial(x,w) = 1, \; \partial(w,y)=2, \; \partial(w,z)=2.\]

Now,

\[\Psi(x,y) = \Psi(x,w) = \Psi(x,z)\]

from the first case.
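The second case relies on a vertex \(w\) adjacent to \(x\) and at distance \(2\) from both \(y\) and \(z\). Lemma 38.1 is not restated in this section, so the following sketch only illustrates what such a \(w\) looks like, by brute-force search in the Johnson graph \(J(10,5)\). The graph is an assumed example, and `bridge` is a hypothetical helper name.

```python
from itertools import combinations

# Assumed example graph: Johnson graph J(10,5), with distance 5 - |u ∩ v|.
X = [frozenset(c) for c in combinations(range(10), 5)]

def dist(u, v):
    return 5 - len(u & v)

x = frozenset({0, 1, 2, 3, 4})
nbrs = [v for v in X if dist(x, v) == 1]   # the first subconstituent of x

def bridge(y, z):
    # Hypothetical helper: return some w adjacent to x with
    # dist(w, y) == dist(w, z) == 2, or None if no such w exists.
    for w in nbrs:
        if dist(w, y) == 2 and dist(w, z) == 2:
            return w
    return None

# All ordered pairs of adjacent vertices y, z inside the first subconstituent.
adjacent_pairs = [(y, z) for y in nbrs for z in nbrs if dist(y, z) == 1]
all_bridged = all(bridge(y, z) is not None for y, z in adjacent_pairs)

print(len(adjacent_pairs), all_bridged)
```

Whenever such a \(w\) exists, the first case applies to the pairs \((y,w)\) and \((w,z)\), which is how the chain \(\Psi(x,y) = \Psi(x,w) = \Psi(x,z)\) arises.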

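Lemma 39.3 With the above notation, the matrices \[A^+ := R^{-1}E^*_2A_2E^*_1 - \Psi E^*_1, \qquad A^- := \tilde{A}A^+ - \left(\frac{a_2}{c_2}-\Psi\right)E^*_1 + \Psi(\tilde{J} - \tilde{A} - E^*_1)\] are both generalized adjacency matrices for the subgraph induced on the first subconstituent with respect to \(x\).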

Moreover, \(A^+\), \(A^-\) have \(0\) diagonal.

Proof. Pick vertices \(y,z\in X\) such that \(\partial(x,y) = \partial(x,z) = 1\).

We show that \(A^+_{yz}\), \(A^-_{yz}\) are both \(0\) if \(\partial(y,z) = 0\) or \(2\).

Since \(A^+\hat{y} = R^{-1}E^*_2A_2E^*_1\hat{y} - \Psi E^*_1\hat{y} = \delta^+_{11}\), \[A^+_{yz} = \langle A^+\hat{y}, \hat{z}\rangle = \langle \delta^+_{11},\hat{z}\rangle = 0,\] if \(\partial(y,z) = 0\) or \(2\).

Since \[\begin{align} A^-\hat{y} & = \tilde{A}A^+\hat{y} - \left(\frac{a_2}{c_2}-\Psi\right)E^*_1\hat{y} + \Psi(\tilde{J} - \tilde{A} - E^*_1)\hat{y}\\ & = \tilde{A}\delta^+_{11} - \left(\frac{a_2}{c_2}-\Psi\right)\hat{y} + \Psi\delta_{12}\\ & = \delta^-_{11}, \end{align}\] \[A^-_{yz} = \langle A^-\hat{y}, \hat{z}\rangle = \langle \delta^-_{11}, \hat{z}\rangle = 0,\] if \(\partial(y,z) = 0\) or \(2\).

Since \(E^*_1TE^*_1 = \mathrm{Span}(\tilde{J},E^*_1, \tilde{A}, \tilde{A}^2, \ldots)\) by Lemma 33.4, \(A^+, A^-\) are both generalized adjacency matrices for the subgraph induced on the first subconstituent with respect to \(x\).

Similarly, \[E^*_1TE^*_1 \ni \tilde{J}, E^*_1, \tilde{A}, A^+, A^-,\] and \(\dim E^*_1TE^*_1 \leq 5\).

Fact: With the above assumption, \[E^*_1TE^*_1 = \mathrm{Span}(\tilde{J}, E^*_1, \tilde{A}, A^+, A^-)\] (these five matrices may not be linearly independent).
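As a small illustration of how the spanning set can be dependent, the sketch below checks in the Johnson graph \(J(10,5)\) (an assumed example, not taken from the lecture) that \(\tilde{A}^2\) is already a combination of \(E^*_1\), \(\tilde{A}\) and \(\tilde{J}\). Only the nonzero blocks indexed by the neighbours of \(x\) are stored, \(\tilde{J}\) is read as the all-ones matrix on that block, and `matmul` is a hypothetical helper.

```python
from itertools import combinations

# Assumed example graph: Johnson graph J(10,5), with distance 5 - |u ∩ v|.
X = [frozenset(c) for c in combinations(range(10), 5)]

def dist(u, v):
    return 5 - len(u & v)

x = frozenset({0, 1, 2, 3, 4})
nbrs = [v for v in X if dist(x, v) == 1]
n = len(nbrs)

# Nonzero blocks of E*_1, A~ = E*_1 A E*_1 and J~, indexed by the neighbours of x.
E1 = [[int(r == c) for c in range(n)] for r in range(n)]
A1 = [[int(dist(u, v) == 1) for v in nbrs] for u in nbrs]
J1 = [[1] * n for _ in range(n)]

def matmul(P, Q):
    # Plain integer matrix product of two n x n lists of lists.
    return [[sum(P[r][k] * Q[k][c] for k in range(n)) for c in range(n)]
            for r in range(n)]

A1_squared = matmul(A1, A1)
combo = [[6 * E1[r][c] + A1[r][c] + 2 * J1[r][c] for c in range(n)]
         for r in range(n)]

closes = (A1_squared == combo)
print(closes)
```

In this example \(\tilde{A}^2 = 6E^*_1 + \tilde{A} + 2\tilde{J}\), so every power of \(\tilde{A}\) stays in \(\mathrm{Span}(\tilde{J}, E^*_1, \tilde{A})\). If Lemma 33.4 applies to this graph, the span in the Fact then has dimension \(3\) rather than \(5\).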

Lemma 39.4 If \(\partial(x,y) = 1\), then \[T(y)\hat{x} = T(x)\hat{y}.\]

Proof. \[\begin{align} T(x)\hat{y} & = T(x)E^*_1\hat{y}\\ & = M(E^*_0+E^*_1)T(x)E^*_1\hat{y} \quad (\text{as $\Gamma$ is thin})\\ & = M\hat{x} + ME^*_1TE^*_1\hat{y}\\ & = M\hat{x} + M\mathrm{Span}(\tilde{J}, E^*_1, \tilde{A}, A^+, A^-)\hat{y}\\ & = M\hat{x} + M\mathrm{Span}(\delta_{12}+\delta_{11}+\delta_{10}, \delta_{10}, \delta_{11}, \delta^+_{11}, \delta^-_{11})\\ & = M\mathrm{Span}(\delta_{01}, \delta_{10}, \delta_{11}, \delta^+_{11}, \delta^-_{11}). \end{align}\]

But the last expression does not change if we interchange \(x\) and \(y\).

Hence, \[T(y)\hat{x} = T(x)\hat{y}.\] This proves the lemma.